Hello, World!

Welcome to the first edition of our fortnightly AI newsletter! Freshers’ weeks have subsided. Assignment deadlines are imminent. Generative AI will undoubtedly be tinkered with, whether you're an economics major, a medical student, or studying the fine arts.

The advancement of Artificial Intelligence (AI) will indubitably impact your life in one way or another. A Salesforce survey found that 51% of the general population has never used generative AI. Whether or not you fall within this demographic, this newsletter aims to provide a nuanced understanding of this rapidly evolving field, explained in layman's terms.

Let’s dive in!

On today’s menu

👂 👀 🗣 ChatGPT can now see, hear, and speak

✅ Microsoft’s Phi-1.5 outperforms bigger models, easing ethical concerns

⚠️ Elon’s AI concerns

🎤 Up your prompt game

👀 🎧 What we’re watching and listening to

Newsroom

ChatGPT can now see, hear, and speak

OpenAI has enhanced ChatGPT with new voice and image capabilities, allowing users to interact more intuitively through voice conversations and visual inputs. This development enables real-time discussions about images, while voice interaction allows for dynamic, user-friendly conversations. These features are being introduced gradually to Plus and Enterprise users, with voice being available on iOS and Android, and image capabilities accessible on all platforms.

ChatGPT’s new features are especially beneficial for students: an assistant that can see and converse makes learning more interactive and intuitive. It can help with solving problems and visualizing concepts, offering more accessible learning support that adapts to individual preferences, whether visual or auditory. See this X (Twitter) thread showcasing this powerful feature.

Tech giants’ AI concerns

In a closed-door meeting with the U.S. Senate, top tech executives, including Elon Musk and Mark Zuckerberg, expressed cautious approval for government regulation of AI. However, specific frameworks and regulations are still a matter of debate among participants.

This milestone event underscores the growing recognition that AI technologies require oversight. The dialogue between tech leaders and legislators can be instrumental in shaping the future of AI regulation, thereby influencing both the industry and broader societal norms.

Microsoft's New Mini Marvel: Phi-1.5 Outperforms Bigger Models, Eases Ethical Concerns

Microsoft Research has unveiled phi-1.5, a lean but powerful language model with 1.3 billion parameters. This compact dynamo not only challenges its larger counterparts on complex tasks but in some cases outperforms them. A noteworthy aspect is its training on high-quality, "textbook-like" synthetic data, which offers a way to sidestep ethical quagmires such as content bias or toxicity.

Parameters in a language model like phi-1.5 are the numerical values the model learns during training and then uses to make predictions; they are what capture language patterns. Tokens, on the other hand, are the pieces of text that the model reads, such as words or parts of words.

Microsoft’s approach points towards more ethical AI development, with a focus on reducing bias and providing more accurate and reliable information. It underscores the importance of responsible AI development for academic learning resources, where unbiased, high-quality information is paramount.
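For the curious, here is a rough Python sketch of the difference between tokens and parameters. It is only an illustration under loud assumptions: real models such as phi-1.5 use learned sub-word tokenizers rather than the crude word-and-punctuation split shown here, and the function names `rough_tokens` and `linear_layer_param_count` are made up for this example.

```python
import re


def rough_tokens(text: str) -> list[str]:
    """Crude stand-in for a tokenizer: split into words and punctuation marks.

    Assumption: real language models use learned sub-word vocabularies,
    so their tokens will differ from these.
    """
    return re.findall(r"\w+|[^\w\s]", text)


def linear_layer_param_count(n_inputs: int, n_outputs: int) -> int:
    """Count the learnable numbers in one tiny fully connected layer.

    One weight per input-output pair, plus one bias per output.
    """
    return n_inputs * n_outputs + n_outputs


tokens = rough_tokens("Hello, World!")
params = linear_layer_param_count(3, 2)

print(tokens)  # the pieces of text the model would read
print(params)  # the numbers the model would learn
```

Tokens are what goes in; parameters are what the model adjusts during training. A 1.3-billion-parameter model simply has vastly more of those adjustable numbers than the toy layer above.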

Prompt Engineering

Improved prompting may well score you a match on Hinge this year, but it may also strengthen a challenge to your landlord or help you start a newsletter for students.

No, this is not online dating advice. Rather it is a guide to improving your proficiency in the AI tools now at your disposal. Why? Well, as they say, tools are only as good as their users…

The output you get from a generative AI depends heavily on the quality of the input you provide. That input is known as a ‘prompt’, which, when tweaked and tuned, can yield surprisingly better results.

A few more minutes spent prompting will save you many more later down the line - I’m afraid time in preparation really is time well spent.

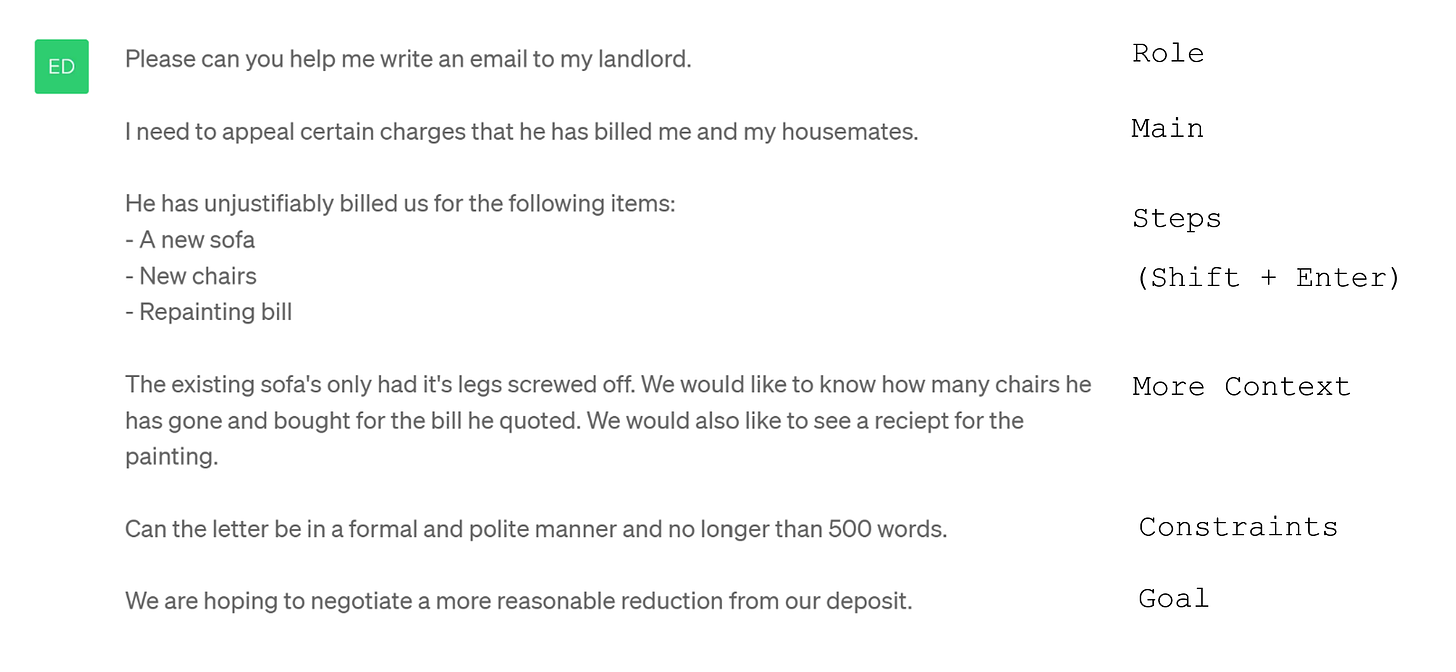

Your input is essentially a framework for the AI to follow; the clearer the framework, the more closely the chatbot can tailor its response to your needs.

With that said, let’s have a look at a guide and a couple of examples.

>>> Role – This is what role you need the chatbot to be performing:

“Edit…” | “Teach…” | “Critique…” | “Brainstorm…” | “Summarise…”| “Code…” | . . . the list goes on.

>>> Highlight the main task – this is the specific thing you want the chatbot to do:

Edit the paragraph I wrote | Teach me about neural networks | Critique my argument | & so on.

>>> Steps (SHIFT + ENTER = NEW LINE)

Bullet points not only encourage you to break down your query into digestible steps; they also provide a list of deliverables that the AI knows it must meet.

Shift + Enter will start a new line. You’re welcome.

>>> Constraints and parameters – customisable settings such as length ([n] words long), tone or manner ([x]), the style of writing to mimic, and the sort of person who will receive it.

>>> Goal – try to give the chatbot an idea of what you are trying to get out of this task.

>>> Iterate – you are negotiating with an omnipatient agent who is there to help.
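If you like to see things in code, the framework above can be sketched as a small Python helper that stitches the sections into one prompt. This is a minimal sketch, not an official tool: the `Prompt` class, its field names, and the example wording are all hypothetical, chosen only to mirror the Role, Task, Steps, Constraints, and Goal sections of the guide.

```python
from dataclasses import dataclass, field


@dataclass
class Prompt:
    """Hypothetical container mirroring the sections of the guide."""

    role: str                       # e.g. "Edit", "Teach", "Critique"
    task: str                       # the main thing you want done
    steps: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    goal: str = ""

    def render(self) -> str:
        """Join the sections into a single prompt, one item per line."""
        lines = [f"{self.role} {self.task}".strip()]
        if self.steps:
            lines.append("Steps:")
            lines.extend(f"- {step}" for step in self.steps)
        if self.constraints:
            lines.append("Constraints:")
            lines.extend(f"- {item}" for item in self.constraints)
        if self.goal:
            lines.append(f"Goal: {self.goal}")
        return "\n".join(lines)


# Example values are invented for illustration.
prompt = Prompt(
    role="Critique",
    task="my argument that recorded lectures improve attendance.",
    steps=["List the weakest claims", "Suggest one counter-example for each"],
    constraints=["Under 200 words", "Friendly but direct tone"],
    goal="I want to strengthen the essay before I submit it.",
)
text = prompt.render()
print(text)
```

Paste the printed text into your chatbot of choice, then iterate: adjust a step or a constraint and render it again until the answer fits.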

Let’s look at an example:

Follow this link to see the output.

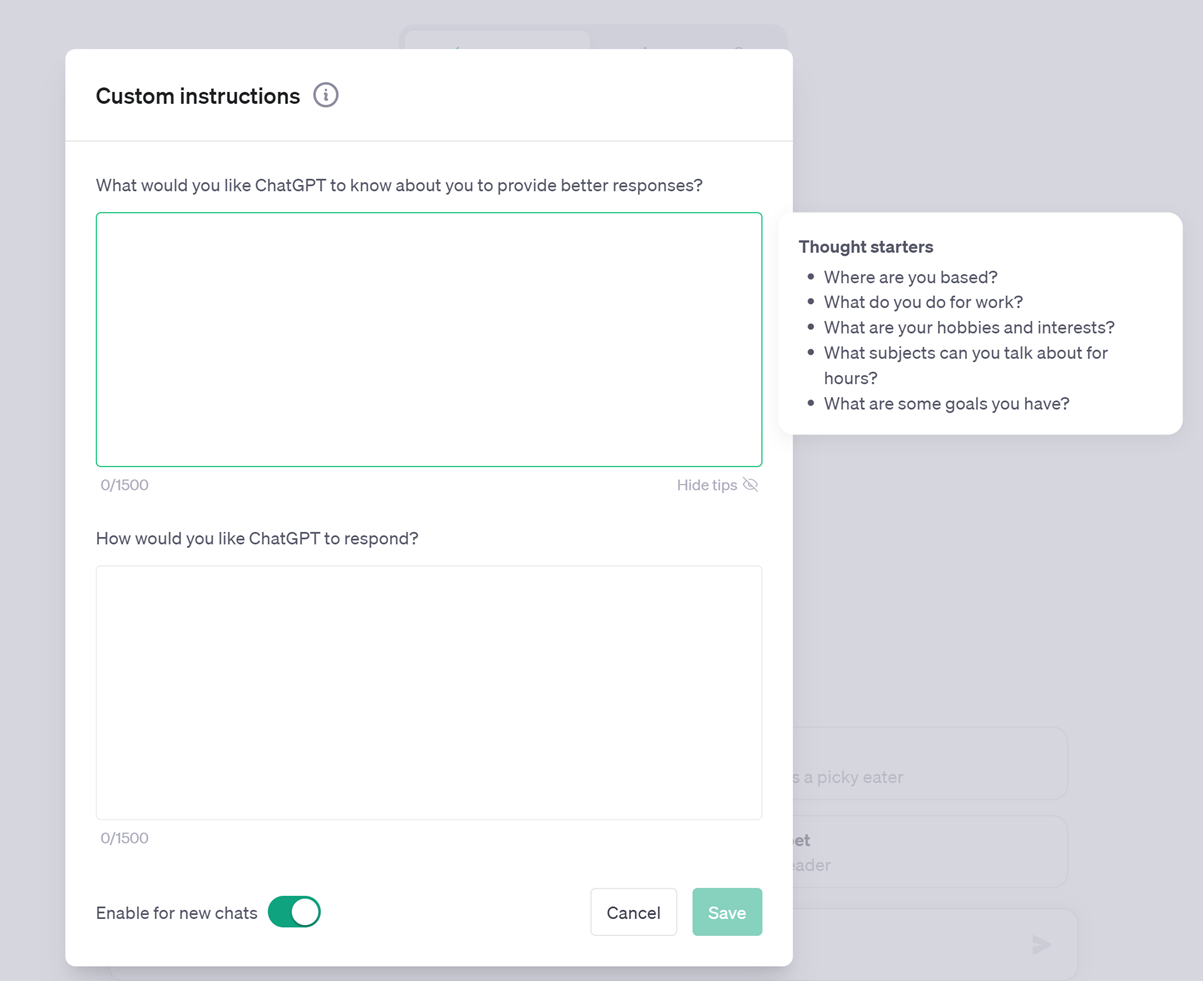

A feature you should be using:

Custom instructions are a game changer. This free-to-all feature is a shortcut that saves you from customising your prompts time and time again.

Include information such as how long or short responses should generally be, how formal or casual they should sound, how much you know about certain topics, and more.

Click the three dots in the bottom left-hand corner, and at the top of the pop-up menu you will find ‘Custom instructions’.

Prompt engineering is a skill that’ll subtly alter the way you interact with chatbots like ChatGPT, Google's Bard, Anthropic's Claude, and Poe (no, ChatGPT is not the be-all and end-all). It is a skill that will undoubtedly stand you in good stead.

What we’re watching

Google DeepMind’s AI ‘AlphaGo’ marked a significant milestone in global AI efforts, and the documentary of the same name tells its story.

This emotional rollercoaster of a documentary perfectly highlights humankind’s evolving relationship with AI, demonstrating a new realm or dimension that machine learning can bring to the table and how it may go on to influence our own lives and perspective of the world we thought we knew…

What we’re listening to

AI, Digital, Data & Disruptive Innovation

Abstract: “In today’s episode, she begins with lessons learned over the past 25 years working at a famed shop like Tudor. Then we dive into topics everyone is talking about today: data, AI, large language models. She shares how she sees investment teams incorporating AI and LLMs into their investing process in the future, her view of the macro landscape, and finally what areas of the market she likes today.”

We want to tailor this newsletter to you, so we would love your feedback.

Please feel free to email us at artificiallyintelligent00@gmail.com with any questions or suggestions, and share this with your friends.

Happy Monday,

Cam and Ed